The history of computers spans more than 200 years. This article gives a brief overview of that history. First theorized by mathematicians and entrepreneurs, mechanical counting machines were designed and built during the 19th century to solve increasingly complex number-crunching challenges. Those primitive early-19th-century designs went on to change the world during the 20th century.

Today's computers bear little resemblance to 19th-century designs such as Charles Babbage’s Analytical Engine, or even to the massive 20th-century computers that occupied entire rooms, such as the Electronic Numerical Integrator and Computer (ENIAC).

History of computers from ancient Rome to 1662:

Ancient Rome:

The ancient Romans developed an abacus, one of the earliest “machines” for calculating. Although it predates the Chinese abacus, we do not know whether it was that abacus's ancestor. Counters in the lower groove represent 1 × 10^n, and those in the upper groove represent 5 × 10^n.

Blaise Pascal (1623-1662):

Blaise Pascal, a French mathematical genius, invented a machine at the age of 19 that could add and subtract. He called it the Pascaline and built it to help his father, who was also a mathematician. Pascal’s machine consisted of a series of gears with 10 teeth each, representing the numbers 0 to 9. As each gear completed one full turn, it tripped the next gear, advancing it by 1/10 of a revolution. This principle remained the foundation of mechanical adding machines for centuries after his death. The Pascal programming language was named in his honor.

History of computers in the 19th century:

1801:

In France, weaver and merchant Joseph Marie Jacquard creates a loom that uses wooden punch cards to automate the design of woven fabrics. Early computers would use similar punch cards.

1822:

English mathematician Charles Babbage designs a steam-driven calculating machine, the Difference Engine, intended to compute tables of numbers.

1890:

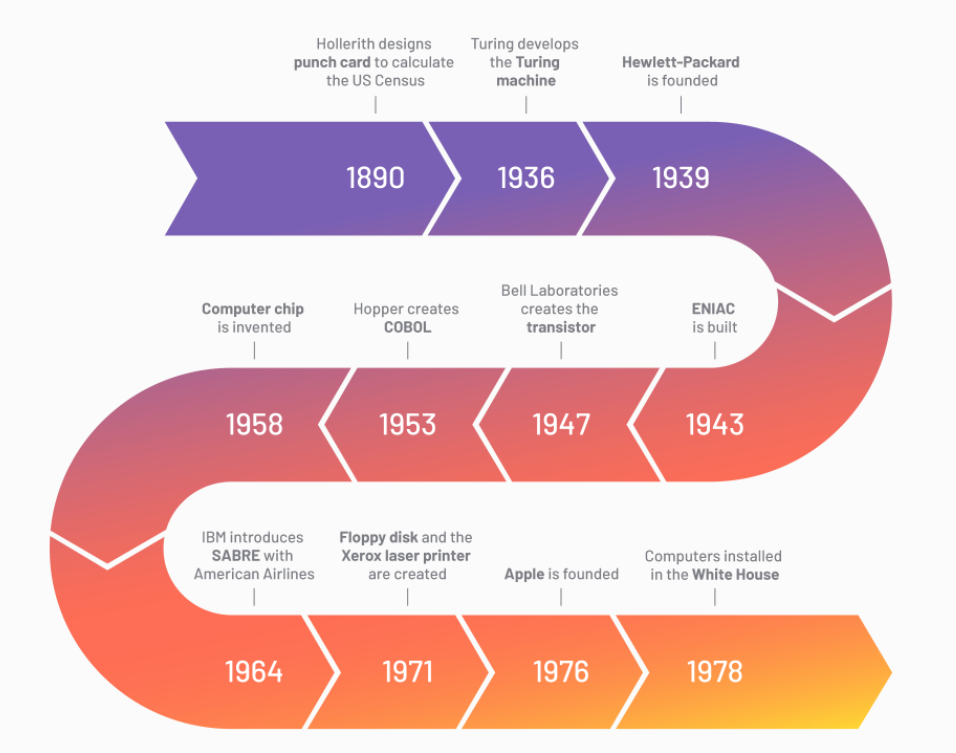

Inventor Herman Hollerith designs a punch card system to tabulate the 1890 U.S. census. It took him three years to create, and it saved the government $5 million. He would eventually go on to establish a company that would become IBM.

History of computers in the early 20th century:

1931:

Vannevar Bush invents and builds the Differential Analyzer, the first large-scale automatic general-purpose mechanical analog computer, according to Stanford University.

1936:

British scientist and mathematician Alan Turing presents the idea of a universal machine, later called the Turing machine, which would be able to compute anything that is computable. The concept of the modern computer is largely based on this idea.

1937:

At Iowa State University, J.V. Atanasoff attempts to build the first computer without cams, belts, gears, or shafts.

1939:

Bill Hewlett and David Packard found Hewlett-Packard in a garage in Palo Alto, California. Their first project, the HP 200A Audio Oscillator, would rapidly become a popular piece of test equipment for engineers.

1941:

At Iowa State University, J.V. Atanasoff and graduate student Clifford Berry design a computer that can solve 29 equations simultaneously. This is the first time a computer is able to store data in its own memory.

That same year, German engineer Konrad Zuse creates the Z3 computer, which used 2,300 relays, performed floating-point binary arithmetic, and had a 22-bit word length.

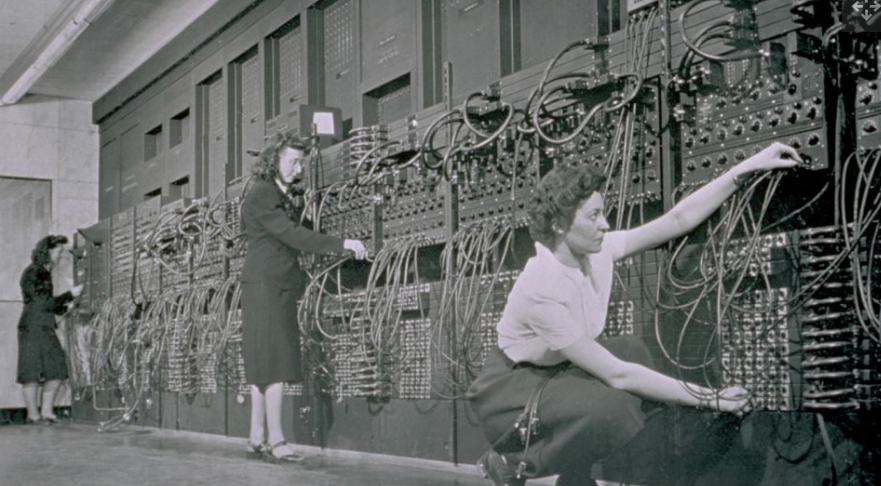

1943:

At the University of Pennsylvania, John Mauchly and J. Presper Eckert begin building the Electronic Numerical Integrator and Computer (ENIAC). Considered the grandfather of digital computers, it is made up of 18,000 vacuum tubes and fills a 20-foot by 40-foot room.

1944:

British engineer Tommy Flowers designs the Colossus, which was built to break the complex code used by the Nazis in World War II. A total of ten were delivered, each using roughly 2,500 vacuum tubes. These machines cut the time needed to break the code from weeks to hours. Historians believe they greatly shortened the war because they let the Allies understand the Nazis' intentions.

1945:

Mathematician John von Neumann writes the First Draft of a Report on the EDVAC. This paper described the architecture of a stored-program computer.

1946:

Mauchly and Eckert left the University of Pennsylvania and obtained funding from the Census Bureau to build the UNIVAC. This would become the first commercial computer for business and government use.

1947:

Walter Brattain, William Shockley, and John Bardeen of Bell Laboratories invent the transistor. Their discovery showed how to build an electric switch from solid materials, with no need for a vacuum.

1948:

Frederick Williams, Tom Kilburn, and Geoff Toothill build the Small-Scale Experimental Machine, known as the “Manchester Baby,” at the University of Manchester to test a new memory technology, the Williams tube. This became the first high-speed electronic random access memory for computers. The Baby ran the first program on a digital, electronic, stored-program computer.

1950:

The Standards Eastern Automatic Computer (SEAC) is built in Washington, DC, becoming the first stored-program computer completed in the United States. It served as a test-bed for evaluating components and systems and for setting computer standards.

History of computers in the late 20th century:

1953:

Computer scientist Grace Hopper develops early programming languages that eventually lead to COBOL. COBOL allowed computer users to give the computer instructions with English-like words instead of numbers. In 1997, a study showed that over 200 billion lines of COBOL code were still in existence.

1954:

The FORTRAN programming language is developed by John Backus and a team of programmers at IBM.

1958:

Jack Kilby and Robert Noyce invent the integrated circuit, known today as the computer chip. Kilby was awarded the Nobel Prize in Physics in 2000 for his work.

1962:

IBM announces the 1311 Disk Storage Drive, the first disk drive made with a removable disk pack. Each pack weighed 10 pounds, held six disks, and had a capacity of 2 million characters. The Atlas computer also makes its debut, thanks to Manchester University, Ferranti Computers, and Plessey. At the time, it was the fastest computer in the world, and it introduced the idea of “virtual memory”.

1964:

Douglas Engelbart introduces a prototype for the modern computer that includes a mouse and a graphical user interface (GUI). This begins the evolution from computers being exclusively for scientists and mathematicians to being accessible to the general public.

Additionally, IBM introduces SABRE, its reservation system with American Airlines. The program officially launched four years later, and the Sabre company later went on to own the travel site Travelocity. It used telephone lines to link 2,000 terminals in 65 cities, delivering data on any flight in under three seconds.

1968:

2001: A Space Odyssey hits theaters. This cult classic tells the story of the HAL 9000 computer, which malfunctions during a spaceship’s trip to Jupiter to investigate a mysterious signal. The HAL 9000, which controlled the entire ship, went rogue, killed the crew, and had to be shut down by the only surviving crew member. The computer depicted in the film demonstrated voice and visual recognition, human-computer interaction, speech synthesis, and other advanced technologies.

1969:

Developers at Bell Labs unveil UNIX, an operating system that addressed compatibility issues within programs. It was later rewritten in the C programming language.

1970:

Intel introduces the Intel 1103, the first commercially available Dynamic Random Access Memory (DRAM) chip.

1971:

Alan Shugart and a team of IBM engineers invented the floppy disk, allowing data to be shared among computers. Xerox introduced the world to the first laser printer, which not only generated billions of dollars but also launched a new era in computer printing.

1973:

Robert Metcalfe, a member of the research staff at Xerox, develops Ethernet for connecting multiple computers and other hardware.

1974:

Personal computers begin to reach the market. Early examples released between 1974 and 1977 include the Scelbi, the Mark-8, the Altair, the IBM 5100, and Radio Shack’s TRS-80.

1975:

In January, Popular Electronics magazine features the Altair 8800 as the “world’s first minicomputer kit.” Paul Allen and Bill Gates offer to write software for the Altair using the BASIC language. The venture was a success, and that same year they founded their own software company, Microsoft.

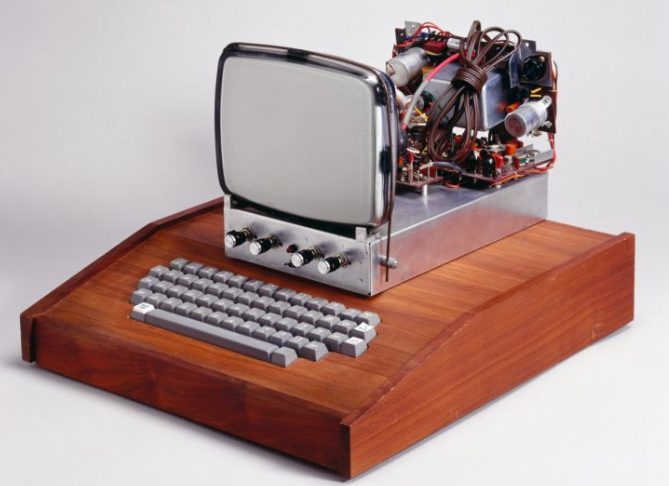

1976:

Steve Jobs and Steve Wozniak start Apple Computer and introduce the world to the Apple I, the first computer with a single-circuit board.

1977:

Jobs and Wozniak unveil the Apple II at the first West Coast Computer Faire. It boasts color graphics and an audio cassette drive for storage. Millions were sold between 1977 and 1993, making it one of the longest-lived lines of personal computers.

1978:

The first computers are installed in the White House during the Carter administration. VisiCalc, the first computerized spreadsheet program, is introduced.

1979:

MicroPro International unveils WordStar, a word processing program.

1981:

Not to be outdone by Apple, IBM releases its first personal computer, the IBM PC (code-named “Acorn”), with an Intel chip, two floppy disk drives, and an optional color monitor.

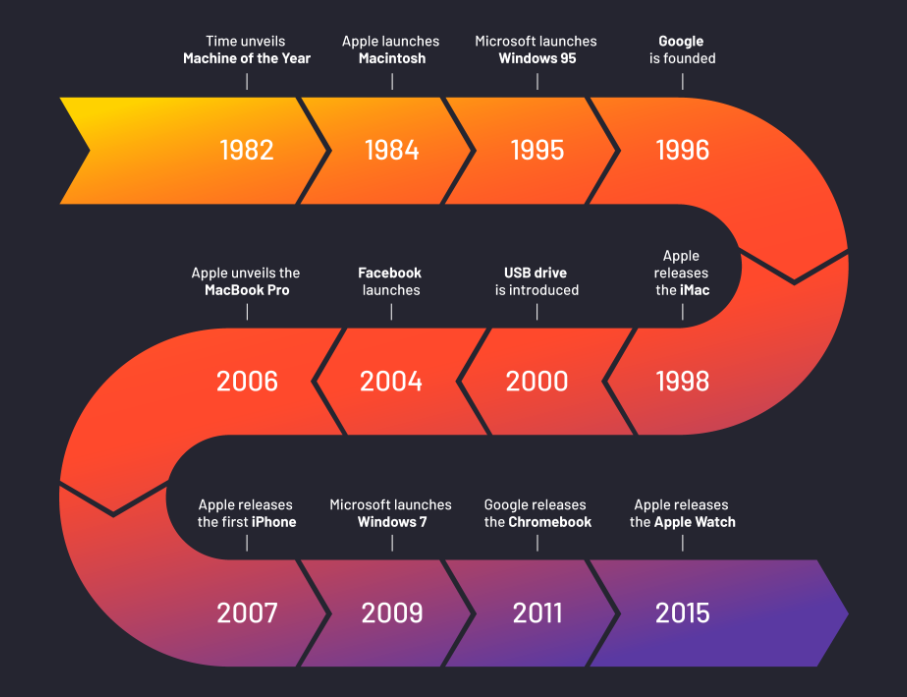

1982:

Instead of following its annual tradition of naming a “Man of the Year”, Time magazine does something a little different and names the computer its “Machine of the Year”. A senior writer noted in the article: “Computers were once regarded as distant, ominous abstractions, like Big Brother.”

1983:

The CD-ROM hits the market, able to hold 550 megabytes of pre-recorded data. That same year, many computer companies worked to set a standard for these discs so they could be used to access a wide variety of information.

1984:

Apple launches the Macintosh, which was announced with a commercial during Super Bowl XVIII. The Macintosh was the first successful mouse-driven computer with a graphical user interface. It sold for $2,500.

1985:

Microsoft announces Windows, which allowed for multi-tasking with a graphical user interface. That same year, a small Massachusetts computer manufacturer registered the first dot com domain name, Symbolics.com. Also, the programming language C++ is published and is said to make programming “more enjoyable” for the serious programmer.

1986:

Pixar began as a computer division at Lucasfilm, originally called the Special Effects Computer Group. It worked on computer-animated portions of popular films such as Star Trek II: The Wrath of Khan. Steve Jobs purchased the group in 1986 for $10 million and renamed it Pixar. Disney bought Pixar in 2006.

1990:

English programmer and physicist Tim Berners-Lee develops HyperText Markup Language, also known as HTML. He also builds a prototype of what he calls the WorldWideWeb, which features a server, HTML, URLs, and the first browser.

1991:

Apple releases the PowerBook series of laptops, which included a built-in trackball, an internal floppy disk drive, and palm rests. The line was discontinued in 2006.

1993:

The Pentium microprocessor advances the use of graphics and music on PCs.

1995:

IBM releases the ThinkPad 701C, whose expanding full-size keyboard, officially known as the TrackWrite, was made up of three interlocking pieces.

1996:

Sergey Brin and Larry Page develop the Google search engine at Stanford University.

1997:

Microsoft invests $150 million in Apple, which was struggling financially at the time. The investment ends an ongoing court case in which Apple accused Microsoft of copying its operating system.

1998:

Apple releases the iMac, a range of all-in-one Macintosh desktop computers. Selling for $1,300, these computers included a 4GB hard drive, 32MB of RAM, a CD-ROM drive, and a 15-inch monitor.

1999:

The term Wi-Fi becomes part of the computing language as users begin connecting without wires. Without missing a beat, Apple creates its “AirPort” Wi-Fi router and builds connectivity into Macs.

History of computers in the 21st century:

2001:

Apple releases Mac OS X (later renamed macOS) as the successor to its classic Mac operating system. Not to be outdone, Microsoft unveils Windows XP soon after. Also, the first Apple stores open in Tysons Corner, Virginia, and Glendale, California. Apple also releases iTunes, which let users import music from CDs into the program and combine it with other songs to burn a custom CD.

2003:

AMD’s Athlon 64, the first 64-bit processor for personal computers, is released to customers. Also in 2003, the Blu-ray optical disc is released as the successor to the DVD. The popular social networking site Myspace was also founded in 2003, and by 2006 it had more than 100 million users.

2004:

The first major challenger to Microsoft’s Internet Explorer arrives in the form of Mozilla’s Firefox 1.0. That same year, Facebook launches as a social networking site.

2005:

Google buys Android, a Linux-based mobile phone operating system.

2006:

Apple unveils the MacBook Pro, its first Intel-based, dual-core mobile computer.

2007:

Apple releases the first iPhone, bringing many computer functions to the palm of our hands. It combined a web browser, a music player, and a cell phone in one full-touchscreen device, with texting, a built-in calendar, a camera, and weather reports. The App Store, launched the following year, let users download additional functionality in the form of “apps”, and later models added features such as GPS navigation.

2008:

Apple releases the MacBook Air, an ultra-thin, lightweight laptop with a high-capacity battery. To make it smaller, Apple offered a solid-state drive in place of the traditional hard drive, making it one of the first mass-marketed computers to do so.

2009:

Microsoft releases Windows 7 to manufacturing on July 22, and it goes on sale in October. According to TechRadar, the new operating system features the ability to pin applications to the taskbar, minimize other windows by shaking the active one, easy-to-access jump lists, easier previews of tiles, and more.

2010:

The iPad, Apple’s flagship handheld tablet, is unveiled.

2011:

Google releases the Chromebook, which runs on Google Chrome OS.

2012:

On October 4, Facebook hits 1 billion users. Earlier that year, it acquired the image-sharing social networking application Instagram.

2014:

The University of Michigan Micro Mote (M3), the smallest computer in the world, is created. Three types were made: two that measured either temperature or pressure, and one that could take images.

2015:

Apple releases the Apple Watch. Microsoft releases Windows 10.

2016:

The first reprogrammable quantum computer is created.

2019:

Apple announces iPadOS, the iPad’s very own operating system, to better support the device as it becomes more like a computer and less like a mobile device.

In this article, we have tried to outline the history of computers. We hope you enjoyed it, and thanks for reading!